
Types of Migration & Tips for Digital Transformation Success

Published on August 18, 2023

Sujatha Malik, Principal Architect


Enterprises envision a cutting-edge new system as their future state: the outdated system is phased out, the new system takes over, and legacy data is managed while new data is integrated seamlessly. In a successful digital transformation, the new system also wins broad approval from its target audience.

Sounds great, right? Unfortunately, this isn’t always a smooth process, and there’s no guarantee of a successful outcome. According to McKinsey, 70% of digital transformations end in failure. That figure is concerning, particularly when we consider that a significant portion of these failures can be attributed to unsuccessful migrations.

It’s no wonder business leaders tend to get the “heebie jeebies” – a slang term meaning a state of nervous fear and anxiety – when it comes to migration. Often, migrations suffer from poor planning or exceed their allotted timeframes. In this article, we explore four different types of migration and share strategies to alleviate these apprehensions and combat the factors that can interfere with a migration’s success.

(Note: In this article, migration means more than data transfer; it refers to a comprehensive system transition.)

Types of Migration

First, let’s explore four types of migration your organization might consider as part of its digital transformation.

Conventional Data Migration

Conventional data migration involves exporting data from source systems into flat files, followed by the creation of a program to read these files and subsequently load the data into the target system. It represents a more compartmentalized approach, suitable for scenarios where the disparity between the source and target data schema is minimal and the volume of data to be migrated remains relatively modest.

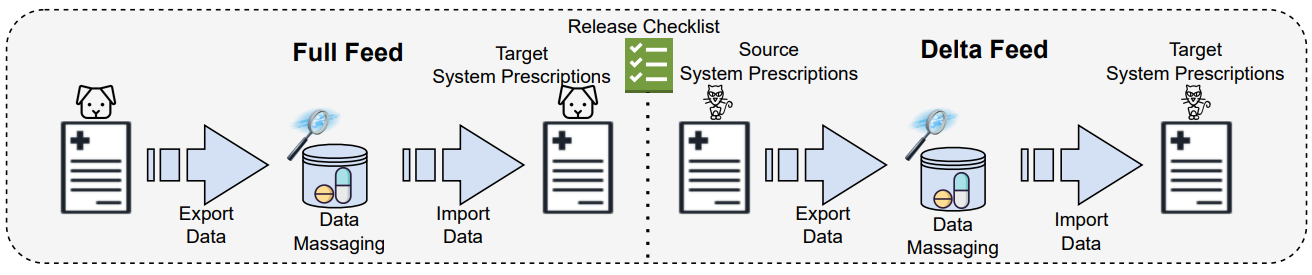
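
The export-then-load flow described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `prescriptions` table, its columns, and the CSV layout are hypothetical, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3


def load_flat_file(flat_file, conn):
    """Read rows exported to a CSV flat file and load them into the target table."""
    # Hypothetical target schema, used only for this illustration.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS prescriptions ("
        "rx_id TEXT PRIMARY KEY, pet_name TEXT, drug TEXT, refills INTEGER)"
    )
    loaded, rejected = 0, []
    for row in csv.DictReader(flat_file):
        try:
            # Light cleanup so the record matches the target format.
            record = (
                row["rx_id"].strip(),
                row["pet_name"].strip(),
                row["drug"].strip().lower(),
                int(row["refills"]),
            )
        except (KeyError, ValueError, AttributeError) as exc:
            # Keep bad rows for review instead of aborting the whole load.
            rejected.append((row, str(exc)))
            continue
        # INSERT OR REPLACE keeps reruns and incremental feeds idempotent.
        conn.execute("INSERT OR REPLACE INTO prescriptions VALUES (?, ?, ?, ?)", record)
        loaded += 1
    conn.commit()
    return loaded, rejected


# A tiny stand-in for the file exported from the source system.
export = io.StringIO(
    "rx_id,pet_name,drug,refills\n"
    "RX-1,Rex,Carprofen,2\n"
    "RX-2,Milo,Apoquel,not-a-number\n"
)
conn = sqlite3.connect(":memory:")
loaded, rejected = load_flat_file(export, conn)
print(f"loaded={loaded} rejected={len(rejected)}")
```

Collecting rejected rows rather than failing on the first one makes each rehearsal run produce a list of data issues to fix before the real cutover.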

Here’s a real-life scenario in which an online pet pharmacy enterprise transitioned from an existing pharmacy vendor to a new one.

The groundwork for the new system was laid in advance, with a switch ready to be activated once the new system had been loaded with data. The launch required migrating approximately 90,000 prescriptions from the old vendor’s database to the new one. While not an overwhelming data load, it was substantial enough to warrant a deliberate choice of method, and we chose conventional data migration.

The data was extracted from the previous vendor and handed over to our team. We meticulously refined the information, converting it into a format compatible with the new vendor’s system for seamless import. This comprehensive procedure was practiced and refined over the span of several months. The planning was executed with exact precision, carefully scheduling both full data feeds and incremental data updates. To ensure meticulous execution, we crafted a release checklist that enabled us to monitor and manage every step of the migration journey. Remarkably, the entire process unfolded seamlessly, maintaining uninterrupted service for the online pet pharmacy store’s end users.

Recommended reading: Easing the Journey from Monolith to Microservices Architecture

Custom Migration

In some cases, a migration process can become exceptionally intricate, demanding the establishment of a dedicated system solely for this purpose. This specialized software system, crafted specifically for the migration endeavor, follows its own distinct lifecycle and will eventually be retired once its mission is fulfilled.

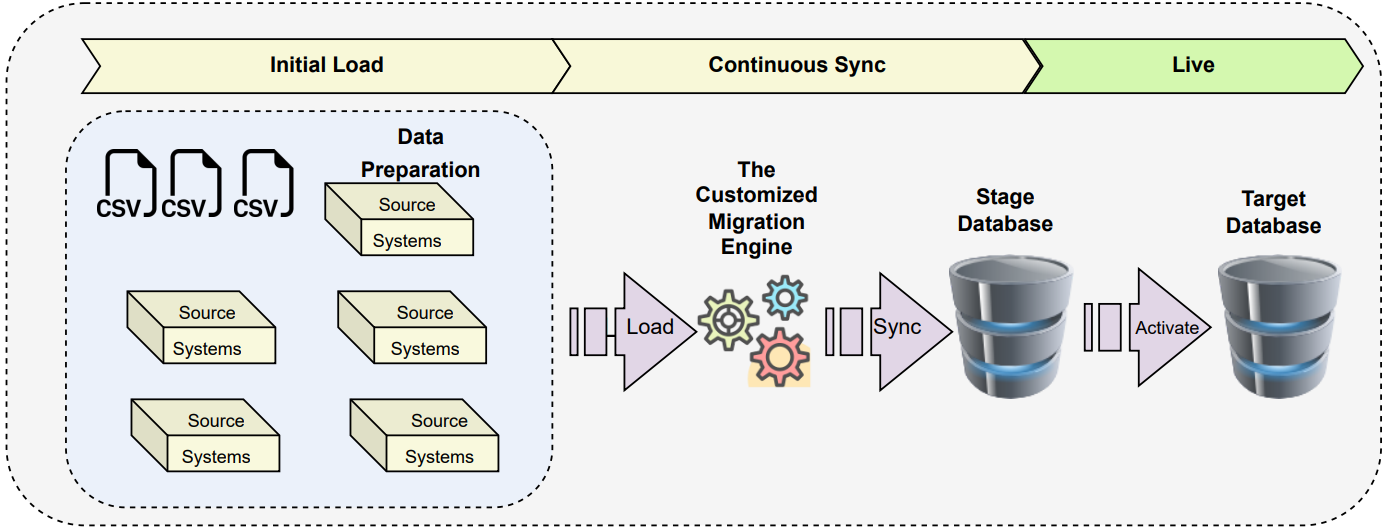

In the online travel industry, one of our clients is preparing for a very large migration. The intricacy of the problem and the sheer volume of data involved called for a highly customized service.

This bespoke solution was designed with a singular objective: to stage and subsequently transfer the data to the new system at the precise moment of user activation.

The scale of this migration project is staggering: 250 million records need to be migrated.

The existence of diverse source systems stands out as a key driver behind the adoption of this distinctive migration approach. This tailor-made service functions as a robust engine, adeptly assimilating data from various sources and meticulously readying it for integration into the staging database. Subsequently, the shift to the new system becomes a seamless transition, executed during runtime upon activation request. This precision-engineered and finely tuned custom solution sets the stage for the client’s journey toward a more enhanced operational landscape.
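
As a rough sketch of the stage-then-activate idea, the snippet below normalizes records from different sources into a staging store and moves a user's records to the target only when that user is activated. The class, the two source formats, and the field names are hypothetical, and plain dictionaries stand in for the staging database and the new system.

```python
class ActivationMigrator:
    """Stages normalized records per user and moves them on activation."""

    def __init__(self, target):
        self.staging = {}        # user_id -> list of staged records
        self.target = target     # dict standing in for the new system
        self.activated = set()   # users that were already migrated

    def stage(self, source_name, raw, normalize):
        # Each source supplies its own normalizer into a common shape.
        record = normalize(raw)
        record["source"] = source_name
        self.staging.setdefault(record["user_id"], []).append(record)

    def activate(self, user_id):
        # Idempotent: activating the same user twice moves nothing new.
        if user_id in self.activated:
            return 0
        records = self.staging.pop(user_id, [])
        self.target.setdefault(user_id, []).extend(records)
        self.activated.add(user_id)
        return len(records)


# Hypothetical normalizers for two differently shaped source systems.
def from_booking_system(raw):
    return {"user_id": raw["customerId"], "trip": raw["itinerary"]}


def from_loyalty_system(raw):
    return {"user_id": raw["member"], "trip": raw["trip_code"]}


target = {}
migrator = ActivationMigrator(target)
migrator.stage("booking", {"customerId": "u1", "itinerary": "NYC-LON"}, from_booking_system)
migrator.stage("loyalty", {"member": "u1", "trip_code": "LON-PAR"}, from_loyalty_system)
migrator.stage("booking", {"customerId": "u2", "itinerary": "SFO-TYO"}, from_booking_system)

moved = migrator.activate("u1")  # only u1's records reach the target
```

Keeping one normalizer per source isolates format differences, so the activation step only ever deals with a single record shape.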

Data Migration Aided by Technology

Now, let’s envision taking the conventional data migration process and enhancing it with the power of modern automation through cutting-edge technology stacks. Picture the benefits of having tools seamlessly handle error handling, retries, deployments, and more. The prospect of achieving migration with such automated prowess might appear enticingly straightforward. However, there’s a twist. The success of this approach hinges on meticulous planning and agility, qualities that tools can aid in monitoring but ultimately require the deft touch of a skilled practitioner.

Several cloud services can assist in automating the various steps of migration. While I’m leaning toward an AWS PaaS-first approach here, it’s important to note that other leading cloud providers offer equivalent tools that are equally competitive.

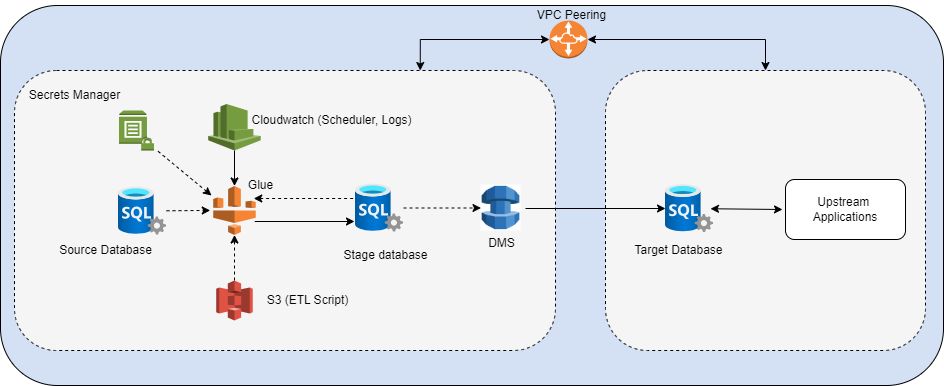

The key components within such a migration system include:

- AWS Glue: AWS Glue serves as a serverless data integration service, simplifying the process of discovering, preparing, and amalgamating data.

- Amazon S3: Amazon Simple Storage Service (S3) stores the ETL scripts and logs.

- AWS Secrets Manager: AWS Secrets Manager securely encrypts and manages sensitive credentials, particularly database access credentials.

- Amazon CloudWatch: A CloudWatch Events rule triggers scheduled ETL script execution, while CloudWatch Logs is used to monitor Glue logs.

- AWS DMS: AWS Database Migration Service (AWS DMS) emerges as a managed migration and replication service, enabling swift, secure, and low-downtime transfers of database and analytics workloads to AWS, with minimal data loss.

With these services in place, the migration can be executed with a straightforward workflow that uses AWS Glue to move data from source to target systems. A crucial requirement for this workflow is VPC peering between the two AWS accounts. Client infrastructure constraints can sometimes prevent such access; in those cases, it’s advisable to work closely with the infrastructure team to resolve the issue.

The process unfolds as follows: data undergoes transformation and finds its place within the stage database. Once the data is primed for activation, it is then seamlessly transferred to the target system through the utilization of AWS DMS.
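
The two-step sequence (transform into the stage database, then transfer with AWS DMS) could be orchestrated roughly as follows. This is a sketch, not the project's actual tooling: the function name and parameters are hypothetical, and the `glue` and `dms` arguments are assumed to be boto3 clients (for example `boto3.client("glue")` and `boto3.client("dms")`) that are passed in so the logic can be exercised with stand-ins.

```python
import time


def run_stage_then_cutover(glue, dms, job_name, replication_task_arn, poll_seconds=30):
    """Run the Glue ETL job, wait for it to finish, then start the DMS task."""
    # Step 1: start the Glue job that transforms data into the stage database.
    run_id = glue.start_job_run(JobName=job_name)["JobRunId"]

    # Step 2: poll until the job run reaches a terminal state.
    while True:
        state = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]["JobRunState"]
        if state == "SUCCEEDED":
            break
        if state in {"FAILED", "STOPPED", "TIMEOUT", "ERROR"}:
            # Do not start the transfer if staging did not complete cleanly.
            raise RuntimeError(f"Glue job {job_name} ended in state {state}")
        time.sleep(poll_seconds)

    # Step 3: staged data is ready, so start the DMS replication task.
    dms.start_replication_task(
        ReplicationTaskArn=replication_task_arn,
        StartReplicationTaskType="start-replication",
    )
    return run_id
```

In practice the trigger would more likely be a scheduled rule than a polling loop; the loop is used here only to keep the ordering of the steps explicit.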

While these tools streamline development, it’s essential to understand how to use them to their full potential. Even so, this is the simpler part of the story; the real complexity arises when we validate the data after migration.

On-Premises to Cloud Migration

This migration is the epitome of complexity: a quintessential enterprise scenario involving a shift from on-premise servers to cloud servers. Cloud vendors offer a wide range of ready-made solutions to facilitate the process. A prime example is the AWS Migration Acceleration Program (MAP), a comprehensive cloud migration program that AWS describes as built on its experience migrating enterprise customers to the cloud. MAP provides enterprises with tools that reduce costs and automate and accelerate execution.

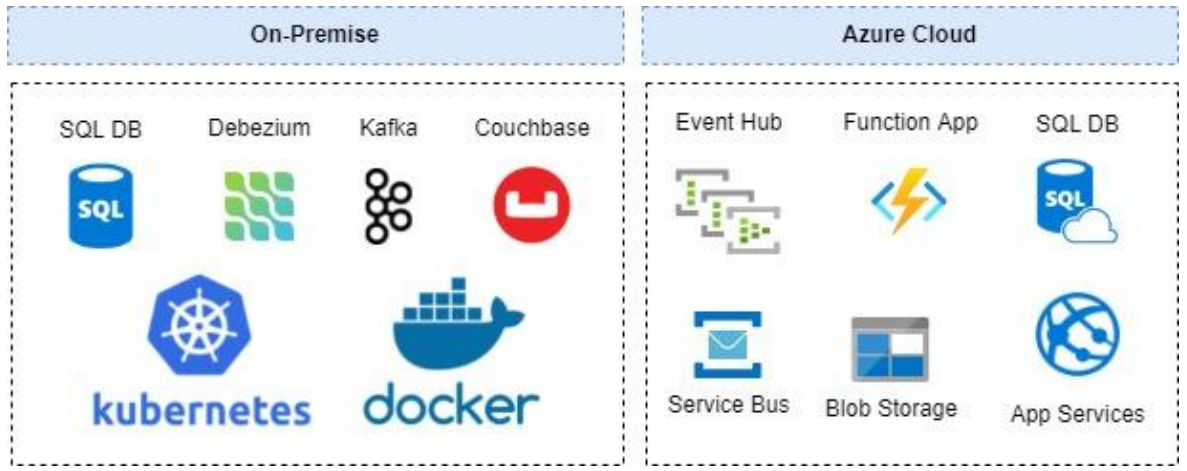

We worked with a leading provider of screening and compliance management solutions on its transformation journey. One of the projects undertaken for this partner was the Data Migration and 2-Way Sync project. Its goal was to engineer a high-performance two-way synchronization strategy capable of supporting both the existing features of the on-premises solution and those newly migrated to a new, service-oriented framework on Azure. The solution also had to handle substantial volumes of binary content gracefully.

Take a look at the tech stack used for this migration:

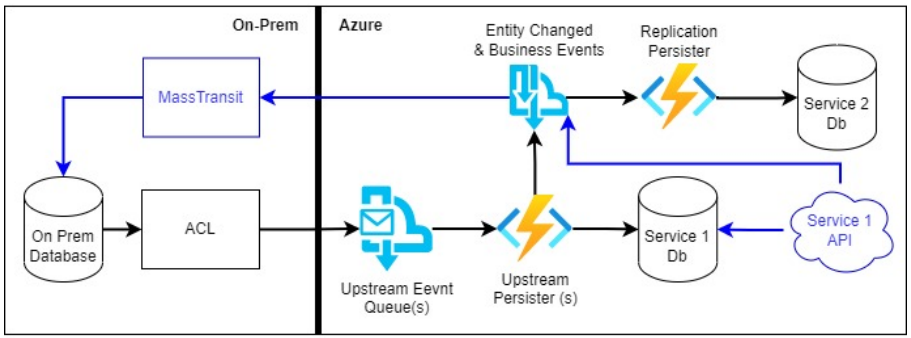

Our solution comprised these integral components:

- ACL: A legacy component tasked with detecting alterations within the on-prem database and subsequently triggering events that are relayed to the cloud.

- Upstream Components: These cloud-based elements encompass a series of filtering, transforming, and persisting actions applied to changes. They are meticulously designed to anchor the modifications within the entity’s designated domain in the cloud. Moreover, these components generate replication events that can trigger responsive actions as required.

- Replication Components: Positioned in the cloud, these components specialize in receiving the replication events. They then proceed to either store the data or execute specific actions in response to the received events.

- MassTransit: In scenarios where cloud-induced changes necessitate synchronization back to the on-prem database, MassTransit steps in. This tool plays a pivotal role in reading all events generated in the cloud, forwarding them to downstream components, thus orchestrating the synchronization of changes.

Collectively, these components form a coherent framework that orchestrates the intricate dance of data synchronization between on-premises and cloud-based systems.

Two-way synchronization relied on the following key features of our solution:

- Table-to-Table Data Synchronization: Our solution facilitated seamless data synchronization between on-premise and cloud databases, or vice versa. This process was orchestrated via an event-driven architecture, ensuring a fluid exchange of information.

- Change Capture Service for On-Prem Changes: In cases where alterations occurred on the on-premise side, a change capture service meticulously detected these changes and initiated corresponding events. These events were then synchronized to the designated home domain, simultaneously triggering notifications for other domains to synchronize their respective data, if deemed necessary.

- Cloud-Initiated Changes and Data Replication: Conversely, when changes manifested in the cloud, our solution orchestrated their transmission to the on-premise data replication service. This was achieved through a streamlined event-driven approach.
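
The event-driven, two-way flow described in this list can be illustrated with a small in-memory model. Everything here is an assumption made for illustration: the class and field names are hypothetical, and tagging each event with its origin so that a replicated change is not sent back to where it came from is one common safeguard, not necessarily the mechanism used in the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    table: str
    key: str
    values: dict
    origin: str  # side where the change was first made: "on_prem" or "cloud"


class Side:
    """One end of the sync: holds tables and an outbox of captured changes."""

    def __init__(self, name):
        self.name = name
        self.tables = {}
        self.outbox = []

    def local_write(self, table, key, values):
        # A local change is stored and captured as an event for the other side.
        self.tables.setdefault(table, {})[key] = dict(values)
        self.outbox.append(ChangeEvent(table, key, dict(values), origin=self.name))

    def apply_remote(self, event):
        # Ignore events that originated here; applying a remote change
        # does not emit a new event, so changes never echo back and forth.
        if event.origin == self.name:
            return
        self.tables.setdefault(event.table, {})[event.key] = dict(event.values)


def sync(a, b):
    """Deliver pending events in both directions."""
    for src, dst in ((a, b), (b, a)):
        while src.outbox:
            dst.apply_remote(src.outbox.pop(0))


on_prem, cloud = Side("on_prem"), Side("cloud")
on_prem.local_write("candidates", "c1", {"status": "screened"})
cloud.local_write("candidates", "c2", {"status": "new"})
sync(on_prem, cloud)
```

A real implementation would also need a policy for conflicting writes to the same key on both sides, which this sketch deliberately leaves out.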

While much ground can be explored in the realm of on-premise to cloud migration, ongoing innovation, such as the integration of tools like CodeGPT, is consistently expanding the avenues for executing migrations. However, to stay focused on the core subject matter at hand, let’s get into the tips that can help alleviate the anxieties associated with these migration endeavors.

Tips for Migration Success

How can you ensure your next migration is successful? Don’t miss these crucial opportunities to simplify and combat the complexities of your migration.

1. Plan for Shorter and Early Test Cycles

Just as integrating and commencing testing early is pivotal in microservices architecture, kickstart the migration process early within the testing cycle. Incorporate numerous testing cycles to optimize the migration process. Our recommendation is to embark on five or more testing cycles. It’s of utmost importance that these cycles unfold in near-real-time production-like environments, replicating data closely resembling the production setting. Morphing tools can be employed to transplant sanitized production data into a staged environment.

Recommended reading: Continuous Testing – How to Measure & Improve Code Quality

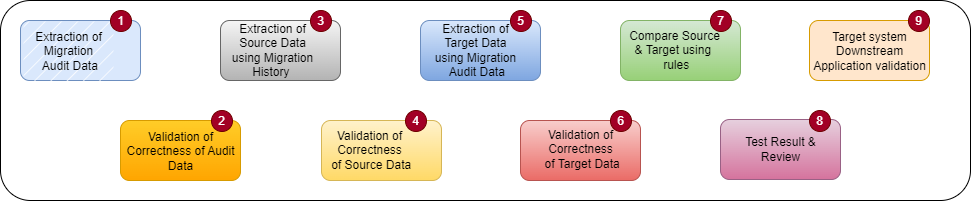

2. Formulate a Comprehensive Validation Strategy

Leave no stone unturned when validating the migrated data. Thorough validation is essential to prevent financial losses or the risk of alienating customers due to a subpar post-migration experience. Here is an exemplary set of validation steps tailored for the post-migration scenario:
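
As one minimal sketch of what such checks might look like, the snippet below compares row counts, finds keys missing from or unexpected in the target, and flags rows whose content differs. The function names and sample fields are hypothetical, and it assumes both sides can be read into lists of dictionaries that share a key column.

```python
import hashlib


def row_checksum(row):
    """Hash a row's fields in a stable order so equal rows hash equally."""
    canonical = "|".join(f"{k}={row[k]}" for k in sorted(row))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def validate_migration(source_rows, target_rows, key):
    """Compare source and target by count, key coverage, and content."""
    source = {r[key]: row_checksum(r) for r in source_rows}
    target = {r[key]: row_checksum(r) for r in target_rows}
    shared = source.keys() & target.keys()
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical sample data: row 2 changed, row 3 was lost, row 4 appeared.
source_rows = [
    {"id": 1, "drug": "a"},
    {"id": 2, "drug": "b"},
    {"id": 3, "drug": "c"},
]
target_rows = [
    {"id": 1, "drug": "a"},
    {"id": 2, "drug": "B"},
    {"id": 4, "drug": "d"},
]
report = validate_migration(source_rows, target_rows, key="id")
```

Matching counts alone can hide a lost row offset by a spurious one, which is why the report lists key and content differences separately.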

3. Initiate with Beta Users

Start the migration process by selecting a group of Alpha and Beta users who will serve as pilots for the migrated data. This preliminary phase aids in minimizing risks in the production systems. Handpick Alpha and Beta users carefully to ensure a smooth transition during live data migration. Alpha users constitute a smaller subset, perhaps around a hundred or so, while Beta users encompass a slightly larger group, potentially comprising a few thousand users. Eventually, the transition is made to a complete dataset of live users.

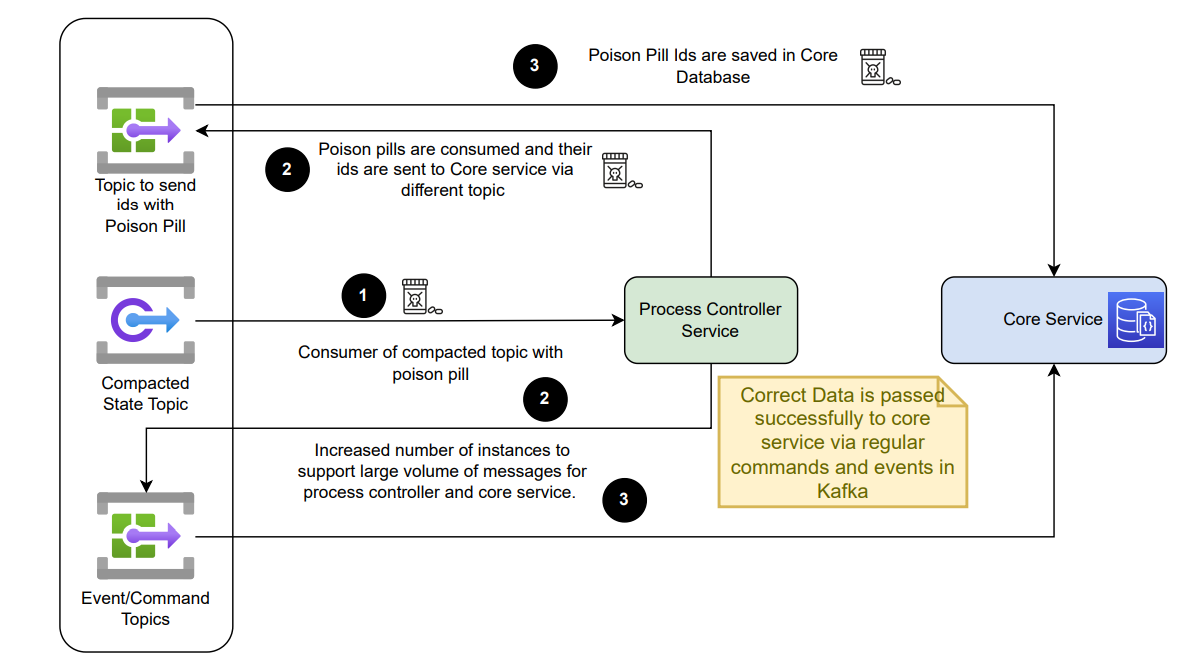
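
A staged rollout like this is often enforced with a simple routing check. The sketch below is a hypothetical illustration: the phase names, the function, and the idea of keeping handpicked user IDs in sets are assumptions, not details from the project.

```python
PHASES = ("alpha", "beta", "live")


def uses_migrated_data(user_id, current_phase, alpha_users, beta_users):
    """Return True if this user should be served from the migrated system."""
    if current_phase not in PHASES:
        raise ValueError(f"unknown phase: {current_phase}")
    if current_phase == "live":
        return True                      # everyone has been moved over
    if user_id in alpha_users:
        return True                      # alpha users are included in every phase
    return current_phase == "beta" and user_id in beta_users


# Handpicked cohorts (tiny here; roughly a hundred and a few thousand in practice).
alpha_users = {"u1"}
beta_users = {"u2"}

decisions = {
    phase: [u for u in ("u1", "u2", "u3") if uses_migrated_data(u, phase, alpha_users, beta_users)]
    for phase in PHASES
}
```

Because the check depends only on the current phase and the cohort sets, moving back a phase is a configuration change rather than a redeployment.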

4. Anticipate Poison Pills

From the outset, plan for poison pills – records in Kafka that consistently fail upon consumption due to potential backward compatibility issues with evolved message schemas. Regularly checking for poison pills in production is a proactive measure to avert last-minute obstacles. Here’s a workflow that illustrates how to address poison pills:
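
One common way to handle poison pills is to retry a bounded number of times and then route the record to a dead-letter store so the consumer can move on. The sketch below models that in plain Python without a Kafka client; the function names, the message format, and the in-memory dead-letter list are all assumptions made for illustration.

```python
import json


def consume(messages, handler, max_attempts=3):
    """Process messages in order, diverting repeated failures to a dead-letter list."""
    processed, dead_letters = [], []
    for offset, raw in enumerate(messages):
        last_error = None
        for _attempt in range(max_attempts):
            try:
                processed.append(handler(json.loads(raw)))
                break
            except (ValueError, KeyError) as exc:
                # Malformed JSON or a field missing from an older schema.
                last_error = exc
        else:
            # Every attempt failed: treat the record as a poison pill,
            # park it for inspection, and keep consuming.
            dead_letters.append(
                {"offset": offset, "raw": raw, "error": repr(last_error)}
            )
    return processed, dead_letters


def handle_order_v2(event):
    """Hypothetical handler that expects the newer schema with a currency field."""
    return (event["order_id"], event["currency"])


messages = [
    '{"order_id": 1, "currency": "USD"}',
    '{"order_id": 2}',          # older schema: no currency field
    "not json",                 # corrupted payload
    '{"order_id": 3, "currency": "EUR"}',
]
processed, dead_letters = consume(messages, handle_order_v2)
```

Recording the offset and the error alongside the raw payload makes it possible to fix the schema issue and replay the parked records later.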

5. Craft a Robust Rollback Strategy

Collaborate with clients to establish a comprehensive rollback strategy, ensuring that expectations are aligned. Conduct mock-run tests of the rollback strategy to preemptively address potential emergencies, as this could be the ultimate recourse to salvage the situation.

6. Seek Assistance When Available

If feasible, consider enlisting paid support to bolster your efforts. For instance, our client benefitted from licensed MongoDB support, utilizing allocated hours to enhance system performance and migration scripts. Such support often introduces a fresh perspective and intimate knowledge of potential challenges and solutions, making it invaluable during the migration process.

7. Incorporate Early Reviews

Be proactive in seeking reviews of the migration architecture from both clients and internal review boards. This diligence is vital to identify any potential roadblocks or discrepancies before they pose real-world challenges. By preemptively addressing issues raised during reviews, you can avoid last-minute complications, such as instances when a migration plan contradicts client policies, necessitating adjustments and improvements.

Conclusion

The vision of a seamless transition to a cutting-edge new system is an alluring prospect for enterprises, promising improved efficiency and enhanced capabilities. However, the journey from outdated systems to a technologically advanced future state is often fraught with challenges, and the alarming statistic that 70% of digital transformations end in failure, as highlighted by McKinsey, is a stark reminder of the complexities involved. Among the key contributors to these failures are unsuccessful migration endeavors, which underscore the critical importance of addressing migration apprehensions.

Indeed, the term “heebie jeebies” aptly encapsulates the anxiety that often accompanies migration processes. The anxiety can be attributed to a range of factors, including poor planning, exceeded timeframes, and unexpected roadblocks. Yet, as this article has explored, there are proven strategies to counter these challenges and achieve successful migrations. By embracing approaches such as shorter and early test cycles, comprehensive validation strategies, staged rollouts with Beta users, preparedness for potential obstacles like poison pills, and crafting effective rollback plans, enterprises can greatly mitigate the risks and uncertainties associated with migrations. Seeking expert assistance and incorporating early reviews also play crucial roles in ensuring a smooth migration journey.

The diverse types of migration covered in this article, from conventional data migration to custom solutions and on-premise to cloud transitions, demonstrate the range of scenarios and complexities that organizations may encounter. By diligently adhering to the strategies outlined here, enterprises can navigate the intricate dance of data synchronization and system transitions with confidence. As the digital landscape continues to evolve, embracing these best practices will not only help ease the “heebie jeebies” but also pave the way for successful digital transformations that empower organizations to thrive in the modern era.

Reach out to the GlobalLogic team for digital advisory and assessment services to help craft the right digital transformation strategy for your organization.
